VGG Model

The VGG Model is a convolutional neural network architecture named after the Visual Geometry Group at the University of Oxford, which developed it. It became popular for its strong performance on image recognition tasks and has been widely used in computer vision applications, including image classification, object detection, and image segmentation.

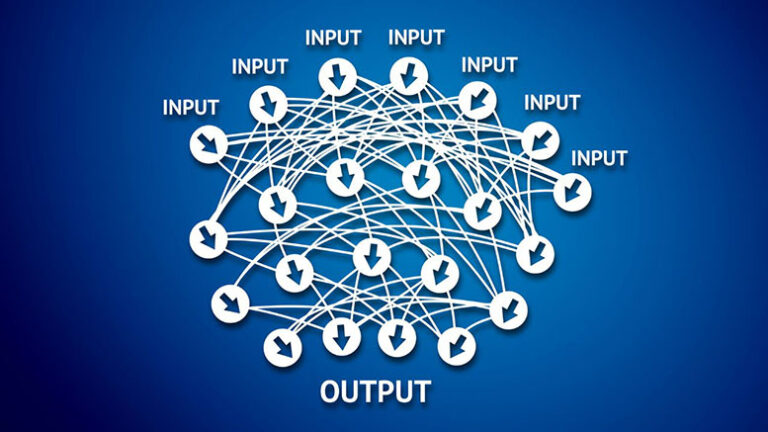

Introduction to Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are a class of deep learning models designed to process grid-structured data such as images. They are built from layers that apply convolution, non-linear activation, and pooling to extract progressively more abstract features from an image.
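To make the three operations concrete, here is a minimal pure-Python sketch that applies one convolution, one ReLU, and one 2×2 max pooling step to a toy image. The 6×6 image and the edge-detecting kernel are hand-picked for illustration; in a real CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation with stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise max(0, x)."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2 (a trailing odd row/column is dropped)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


# Toy 6x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-picked 3x3 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

conv_out = conv2d(image, kernel)           # 4x4 feature map
features = max_pool2x2(relu(conv_out))     # 2x2 feature map
print(features)  # [[3, 3], [3, 3]]
```

The convolution responds only where the kernel straddles the edge, and pooling keeps that response while shrinking the map from 4×4 to 2×2.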

CNNs have revolutionized the field of computer vision and have become the go-to choice for tasks such as image classification, object detection, and image generation.

The VGG Model Architecture

The VGG Model is known for its simplicity and effectiveness in image recognition tasks. It consists of a series of convolutional and pooling layers, followed by fully connected layers that perform the final classification.

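The two most widely used variants are VGG16, which has 16 weight layers (13 convolutional and 3 fully connected), and the deeper VGG19, which has 19 weight layers (16 convolutional and 3 fully connected). Pooling layers are not counted because they have no learnable weights. These models performed strongly on the ImageNet benchmark: in the ILSVRC 2014 competition, the VGG team placed first in the localization task and second in the classification task.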
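The layer counts can be checked from the layer layout itself. The sketch below uses the list notation often seen in VGG implementations, where an integer is the number of output channels of a convolutional layer and "M" marks a max pooling layer. The names `VGG16`, `VGG19`, and `weight_layers` are illustrative, not part of any particular library.

```python
# Integers: output channels of a convolutional layer. "M": max pooling.
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]
VGG19 = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
         512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]

NUM_FC_LAYERS = 3  # both variants end with three fully connected layers


def weight_layers(config):
    """Count layers with learnable weights (pooling layers have none)."""
    conv_layers = sum(1 for item in config if item != "M")
    return conv_layers + NUM_FC_LAYERS


print(weight_layers(VGG16), weight_layers(VGG19))  # 16 19
```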

Key Features of the VGG Model

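One of the key features of the VGG Model is its use of small 3×3 convolutional filters with a stride of 1. Stacking several of these layers covers the same image region as one larger filter: two stacked 3×3 layers see a 5×5 region, and three see a 7×7 region. The stack uses fewer parameters than the single large filter and applies a non-linearity after every layer, which lets the model learn more complex features.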
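The effect of stacking 3×3 filters can be worked out with a little arithmetic. The sketch below assumes stride 1 and the same number of input and output channels in every layer (64 here, chosen only as an example), and it counts weights without biases.

```python
def stacked_receptive_field(num_layers, kernel_size=3):
    """Receptive field of stacked stride-1 convolutions.

    Each additional layer widens the field by kernel_size - 1 pixels.
    """
    return 1 + num_layers * (kernel_size - 1)


def conv_weights(kernel_size, channels, num_layers=1):
    """Weights (no biases) for layers with `channels` inputs and outputs."""
    return num_layers * kernel_size * kernel_size * channels * channels


channels = 64  # example value

print(stacked_receptive_field(2))               # 5
print(stacked_receptive_field(3))               # 7
print(conv_weights(3, channels, num_layers=3))  # 110592
print(conv_weights(7, channels))                # 200704
```

Three 3×3 layers need 27·C² weights against 49·C² for one 7×7 layer with the same receptive field, a reduction of roughly 45%.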

The VGG Model also uses 2×2 max pooling layers with a stride of 2. Each one halves the height and width of the feature maps, which reduces the computational cost of the layers that follow.
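As a rough illustration of the downsampling, the sketch below traces the spatial size of the feature maps through the pooling layers. It assumes the standard VGG setup of a 224×224 input, five 2×2 pooling layers with stride 2, and padded convolutions that leave the size unchanged.

```python
def pool_output_size(size, window=2, stride=2):
    """Output width/height of a pooling layer without padding."""
    return (size - window) // stride + 1


size = 224  # assumed input resolution
sizes = [size]
for _ in range(5):  # assumed number of pooling layers
    size = pool_output_size(size)
    sizes.append(size)

print(sizes)  # [224, 112, 56, 28, 14, 7]
```

After the fifth pooling layer each feature map is 7×7, so it holds 1/1024 as many values as a map at the input resolution.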

Additionally, the VGG Model uses rectified linear unit (ReLU) activation functions to introduce non-linearity into the network and improve its ability to learn complex patterns in the data.

Training and Fine-Tuning the VGG Model

Training a VGG Model from scratch is computationally expensive because of its depth and large number of parameters (roughly 138 million for VGG16). However, pre-trained versions of the VGG Model are available that have been trained on large datasets such as ImageNet. These pre-trained models can be fine-tuned on a smaller dataset to adapt them to a specific task, such as classifying a new set of images.
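The parameter count, and the saving that fine-tuning offers, can be estimated directly from the layer sizes. The sketch below assumes the standard VGG16 layout (3×3 convolutions on a 224×224 RGB input, then fully connected layers of 4096, 4096, and 1000 units). The 10-class replacement head is a hypothetical fine-tuning scenario, not something prescribed by the model.

```python
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]


def conv_params(config, in_channels=3, kernel_size=3):
    """Weights plus biases of all convolutional layers."""
    total = 0
    for item in config:
        if item == "M":  # pooling has no parameters
            continue
        total += kernel_size * kernel_size * in_channels * item + item
        in_channels = item
    return total


def fc_params(sizes):
    """Weights plus biases of a chain of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


backbone = conv_params(VGG16)
classifier = fc_params([512 * 7 * 7, 4096, 4096, 1000])
print(backbone)               # 14714688
print(classifier)             # 123642856
print(backbone + classifier)  # 138357544

# Hypothetical fine-tuning setup: freeze all pre-trained layers and train
# only a new final layer for a 10-class task.
new_head = fc_params([4096, 10])
print(new_head)               # 40970
```

In this scenario only about 41 thousand parameters are trained instead of about 138 million. Note also that most of the parameters sit in the fully connected layers, not in the convolutional ones.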

Applications

The VGG Model has been used in a wide range of applications, including image classification, object detection, and image segmentation. In image classification tasks, the VGG Model has shown high accuracy in distinguishing between different categories of objects in images.

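In object detection tasks, the VGG Model typically serves as the feature-extraction backbone of a detection framework that detects and localizes objects within an image; Faster R-CNN and SSD, for example, were originally built on VGG16. In image segmentation tasks, its convolutional layers can be adapted to assign a label to every pixel, as in fully convolutional networks, so that the image is divided into regions based on its content.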

Challenges and Future Directions

While the VGG Model has shown impressive performance in image recognition tasks, it is not without its limitations. The main one is its size and computational cost: the large number of parameters and of operations per image make it slow to train and difficult to deploy on resource-constrained devices.
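A back-of-the-envelope estimate shows the scale of the problem. The sketch below counts multiply-accumulate operations (MACs) for one forward pass of VGG16 and the memory needed to store its weights. It assumes a 224×224 RGB input, padded 3×3 convolutions, 32-bit floating-point weights, and the parameter count of 138,357,544 that follows from the standard layout. It ignores biases, activations, and pooling, so treat the figures as rough.

```python
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]


def conv_macs(config, size=224, in_channels=3, kernel_size=3):
    """Multiply-accumulates of all convolutional layers for one image."""
    total = 0
    for item in config:
        if item == "M":
            size //= 2  # 2x2 pooling with stride 2 halves each dimension
            continue
        # One MAC per kernel weight, per input channel, per output value.
        total += size * size * kernel_size * kernel_size * in_channels * item
        in_channels = item
    return total


fc_macs = 512 * 7 * 7 * 4096 + 4096 * 4096 + 4096 * 1000
total_macs = conv_macs(VGG16) + fc_macs
print(f"{total_macs / 1e9:.1f} GMACs per image")  # 15.5 GMACs per image

num_params = 138_357_544  # assumed: standard VGG16 parameter count
weight_bytes = num_params * 4  # 4 bytes per 32-bit float
print(f"{weight_bytes / 2**20:.0f} MiB of weights")  # 528 MiB of weights
```

The convolutional layers account for almost all of the computation, while the fully connected layers account for most of the stored weights.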

Future research directions for the VGG Model include exploring ways to reduce its computational complexity while maintaining its performance, as well as adapting the model for new tasks and datasets.

Conclusion

The VGG Model is a powerful convolutional neural network architecture that has gained widespread popularity for its exceptional performance in image recognition tasks. With its simple yet effective design, the VGG Model has become a go-to choice for researchers and practitioners working in the field of computer vision.

By understanding the key features and applications of the VGG Model, we can harness its capabilities to tackle a wide range of image recognition challenges and drive advancements in the field of artificial intelligence.