Scientists use AI facial analysis to predict cancer survival outcomes

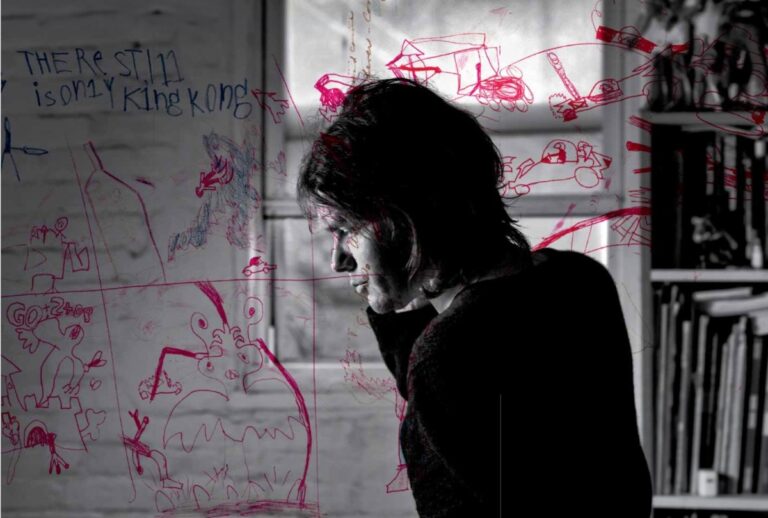

Scientists have used artificial intelligence analysis of the faces of cancer patients to predict survival outcomes and in some cases outperform clinicians’ short-term life expectancy forecasts.

The researchers used a deep learning algorithm to estimate the biological age of subjects from their photos and found that cancer patients looked, on average, about five years older than their chronological ages.

The new tool, known as FaceAge, is part of a growing push to use estimates of ageing in bodily organs as so-called biomarkers of potential disease risks. Advances in AI have boosted these efforts because of the technology's ability to learn from large health data sets and make risk projections based on them.

The research showed the information derived from pictures of faces could be “clinically meaningful”, said Hugo Aerts, co-senior author of a paper on the study published in Lancet Digital Health on Thursday.

“This work demonstrates that a photo like a simple selfie contains important information that could help to inform clinical decision-making and care plans for patients and clinicians,” said Aerts, director of AI in Medicine at Massachusetts-based Mass General Brigham.

“How old someone looks compared to their chronological age really matters — individuals with FaceAges that are younger than their chronological ages do significantly better after cancer therapy”, he added.

The scientists trained FaceAge on 58,851 photos of presumed healthy people from public data sets. They then tested the algorithm on 6,196 cancer patients, using photos taken at the start of radiotherapy.

Among the cancer patients, the older the FaceAge, the worse the survival outcome, even after adjusting for chronological age, sex and cancer type. The effect was especially pronounced for people who appeared over 85.

The scientists then asked 10 clinicians and researchers to predict whether patients receiving palliative radiotherapy for advanced cancers would be alive after six months. The human assessors were right about 61 per cent of the time when they had access only to a patient photo, but that improved to 80 per cent when they had FaceAge analysis too.

Possible limitations of FaceAge include biases in the data and the potential for readings to reflect errors in the model rather than actual differences between chronological and biological age, the research team said.

The scientists are now testing the technology on a wider range of patients, as well as assessing its ability to predict diseases, general health status and lifespan.

The study of biomarkers for ageing is a subject of intense research activity. In February, scientists unveiled a simple blood test to detect how fast internal organs age and help flag increased risks for 30 diseases, including lung cancer.

Perceived ageing has emerged as a potential predictor of mortality and several age-related diseases, researchers say. The drawback is that generating the data by human observation is time-consuming and costly.

The evaluation of FaceAge appeared to be “quite thorough”, said Jaume Bacardit, a Newcastle University AI specialist who has done work applying the technology to perceived ageing.

But there needed to be more explanation of how the AI technique worked, to check for potential distorting factors, he added.

“That is, which parts of the face are they basing their predictions on?” Bacardit said. “This will help identify potential confounders that may go undetected otherwise.”