AI Bias

Artificial intelligence (AI) has become part of everyday life, from virtual assistants such as Siri and Alexa to the recommendation systems on Netflix and Amazon. While AI has the potential to transform industries and improve efficiency, there is growing concern about bias in AI systems. AI bias refers to systematic errors in an AI system that result in unfair or discriminatory treatment of certain groups of people based on their race, gender, or other characteristics.

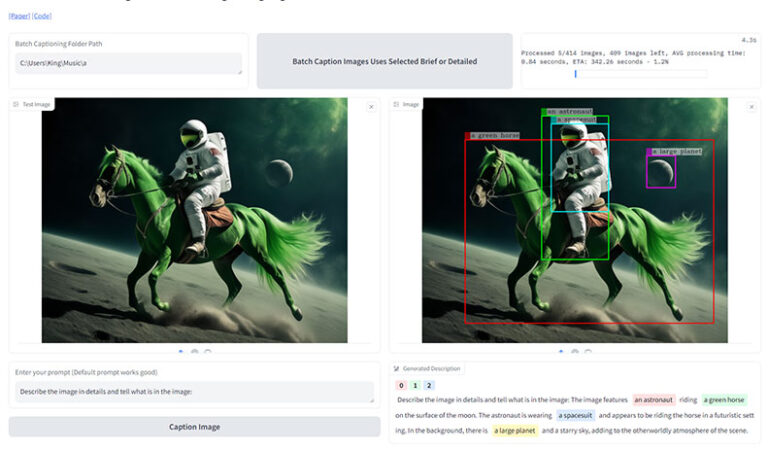

In recent years, numerous cases of AI bias have caused harm and reinforced discrimination. For example, a 2016 ProPublica analysis of COMPAS, a widely used software tool for predicting whether criminal defendants will reoffend, found that it was biased against African Americans: Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be wrongly labeled high risk (a false positive). Similarly, facial recognition technology has been shown to have higher error rates for people of color and women, which can lead to misidentification and, when used by police, wrongful arrests.

AI Bias in Hiring and Recruitment

One area where AI bias has been particularly problematic is hiring and recruitment. Many companies use AI algorithms to screen job applicants and identify the strongest candidates for a position. However, these algorithms can be biased against certain groups, introducing discrimination into the hiring process.

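For example, Reuters reported in 2018 that Amazon had abandoned an experimental AI recruiting tool after finding that it was biased against women. Because the system was trained on resumes submitted to the company over a ten-year period, most of which came from men, it learned to penalize resumes that included the word “women’s”, as in “women’s chess club captain”, and downgraded graduates of two all-women’s colleges. A tool like this, left in place, would steer fewer women toward interviews and perpetuate gender inequality in the workplace.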
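A common way to probe for a penalty like this is counterfactual testing: change only the term in question and check whether the score moves. The sketch below assumes a toy linear scorer with invented token weights; `TOKEN_WEIGHTS`, `score_resume`, and `counterfactual_gap` are hypothetical names, not taken from any real screening system. The negative weight on "women's" stands in for what a model could learn from historically skewed data.

```python
import re

# Hypothetical weights standing in for a learned resume-screening model.
# These numbers are invented for illustration, not taken from a real system.
TOKEN_WEIGHTS = {
    "python": 1.5,
    "captain": 0.8,
    "chess": 0.3,
    "women's": -1.2,  # the kind of proxy penalty a skewed training set can produce
}


def score_resume(text: str) -> float:
    """Toy linear scorer: sum the weights of known tokens in the lowercased text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(TOKEN_WEIGHTS.get(token, 0.0) for token in tokens)


def counterfactual_gap(text: str, original: str, replacement: str) -> float:
    """Score change when one term is swapped for another and nothing else changes."""
    swapped = text.lower().replace(original.lower(), replacement.lower())
    return score_resume(swapped) - score_resume(text)


resume = "Python developer; women's chess club captain"
gap = counterfactual_gap(resume, "women's", "men's")
print(f"score change after swap: {gap:+.2f}")
```

Here swapping "women's" for "men's" raises the score by about 1.2, a gap worth investigating. A nonzero gap only shows that the model treats the two terms differently; deciding whether that difference is justified still requires human review.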

To address this issue, companies should monitor and test their AI systems for bias on an ongoing basis. This includes ensuring that the training data used to develop the algorithm is diverse and representative of the applicant population, and checking the system's outcomes, for example by comparing selection rates across groups, so that discriminatory patterns are caught before they shape hiring decisions.
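One widely used outcome check in U.S. employment practice is the "four-fifths rule" from the Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is less than 80% of the rate for the highest-rate group is generally regarded as showing evidence of adverse impact. The sketch below assumes screening results are available as `(group, selected)` pairs; the function names and the applicant counts in the example are hypothetical.

```python
from collections import Counter


def selection_rates(outcomes):
    """Map each group to (number selected) / (number of applicants)."""
    applied, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        applied[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / applied[group] for group in applied}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    highest = max(rates.values())
    if highest == 0:
        return {group: 1.0 for group in rates}  # nobody selected: nothing to compare
    return {group: rate / highest for group, rate in rates.items()}


def flag_adverse_impact(outcomes, threshold=0.8):
    """True for any group whose impact ratio falls below the four-fifths threshold."""
    ratios = impact_ratios(selection_rates(outcomes))
    return {group: ratio < threshold for group, ratio in ratios.items()}


# Hypothetical screening results: 40 of 100 men and 24 of 100 women advanced.
outcomes = [("men", i < 40) for i in range(100)] + [("women", i < 24) for i in range(100)]
print(impact_ratios(selection_rates(outcomes)))  # women's ratio is about 0.6
print(flag_adverse_impact(outcomes))
```

A flagged ratio is a signal to investigate, not proof of discrimination; small samples in particular can produce misleading ratios.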

AI Bias in Criminal Justice

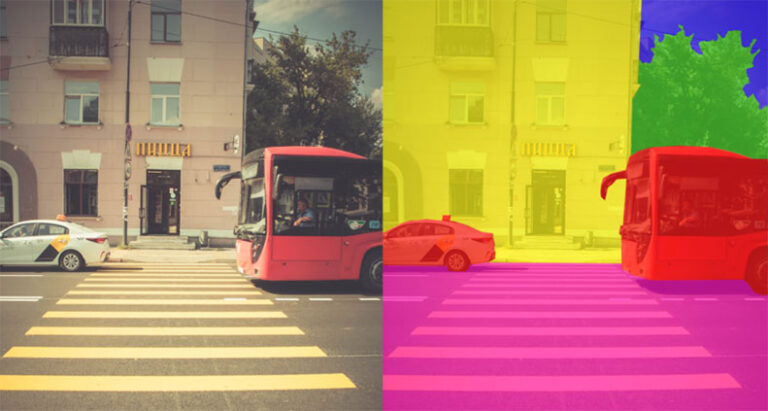

Another area where AI bias has raised concerns is the criminal justice system. Many law enforcement agencies use predictive policing algorithms to identify areas with high crime rates and allocate resources accordingly. Because these algorithms are typically trained on historical crime data, which reflects where police have patrolled in the past as well as where crime actually occurs, they can send more officers into minority communities that were already heavily policed. Critics argue that this leads to over-policing and higher rates of incarceration for people of color.
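Critics describe this as a feedback loop: recorded crime depends partly on where officers are sent, and officers are sent where recorded crime is high. The toy simulation below illustrates the idea under deliberately simple, hypothetical assumptions: two districts with the same true incident rate, a single patrol sent each day to whichever district has more recorded incidents, and incidents recorded only where the patrol goes. `simulate_patrols` is an illustrative name, and this is not a model of any real predictive policing product.

```python
def simulate_patrols(initial_counts, incidents_per_day, days):
    """Greedy allocation toy model.

    Every district has the same true incident rate, but only the district that
    receives the patrol gets its incidents recorded. Ties go to the lower index.
    """
    counts = list(initial_counts)
    for _ in range(days):
        # Send the patrol wherever the recorded history is largest.
        target = max(range(len(counts)), key=lambda i: counts[i])
        # Incidents in the patrolled district are recorded; elsewhere they go unseen.
        counts[target] += incidents_per_day
    return counts


# District 0 starts with one extra recorded incident from historical data.
final = simulate_patrols([11, 10], incidents_per_day=1, days=100)
share = final[0] / sum(final)
print(final, f"district 0 share of recorded incidents: {share:.0%}")
```

A one-incident head start grows into over 90% of recorded incidents even though both districts have identical underlying rates. Real deployments are far more complex, but the sketch shows how training on recorded incidents can entrench existing patrol patterns.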

In addition, some judges use algorithmic risk assessment tools to estimate a defendant's risk of recidivism and inform decisions about bail and sentencing. When these tools are biased against certain groups, they can lead to harsher outcomes for people of color and perpetuate racial disparities in the criminal justice system.

To address this issue, policymakers and law enforcement agencies must be transparent about how AI is used in the criminal justice system and implement safeguards against bias and discrimination. These include regularly auditing AI systems for bias, for example by comparing error rates such as false positive rates across racial groups, and training judges and law enforcement officials on the potential harms of AI bias.
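One concrete audit, in the spirit of the ProPublica analysis, is to compare false positive rates across groups: among people who did not reoffend, what share were labeled high risk? The sketch assumes records shaped as `(group, predicted_high_risk, reoffended)`; the function name, group labels, and counts are hypothetical and are not ProPublica's data.

```python
from collections import Counter


def false_positive_rates(records):
    """For each group: share of people who did NOT reoffend but were labeled high risk."""
    negatives, false_positives = Counter(), Counter()
    for group, predicted_high_risk, reoffended in records:
        if reoffended:
            continue  # false positives are only defined among actual negatives
        negatives[group] += 1
        if predicted_high_risk:
            false_positives[group] += 1
    return {group: false_positives[group] / negatives[group] for group in negatives}


# Hypothetical audit sample: 100 non-reoffenders and 50 reoffenders per group.
records = (
    [("group_a", i < 40, False) for i in range(100)]
    + [("group_b", i < 20, False) for i in range(100)]
    + [("group_a", True, True) for _ in range(50)]
    + [("group_b", i < 30, True) for i in range(50)]
)
rates = false_positive_rates(records)
print(rates)  # group_a: 0.4, group_b: 0.2
```

In this sample, group_a's false positive rate is twice group_b's. Equalizing false positive rates can conflict with other fairness criteria, such as calibration, when base rates differ between groups, so choosing which metric to audit is itself a policy decision.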

Mitigating AI Bias

While AI bias is a complex and pervasive issue, there are steps that can be taken to mitigate its impact. One key strategy is to increase diversity and representation in the development and implementation of AI systems. By including a diverse group of stakeholders in the design and testing process, companies can identify and address potential biases before they cause harm.

In addition, companies should be transparent about the use of AI algorithms and provide explanations for how decisions are made. This can help to build trust with users and ensure accountability in the decision-making process.
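For a simple linear scoring model, one straightforward explanation is to report each feature's contribution (its weight times its value) and rank the contributions by size. The sketch below assumes such a model; `explain_linear_score`, the feature names, and the weights are hypothetical. This per-feature breakdown is exact only for linear scores; more complex models need dedicated explanation techniques.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and each feature's contribution, largest magnitude first."""
    contributions = {
        name: weights[name] * value for name, value in features.items() if name in weights
    }
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical screening-model weights and one applicant's feature values.
weights = {"years_experience": 0.5, "certifications": 0.8, "employment_gap_years": -0.6}
applicant = {"years_experience": 4, "certifications": 1, "employment_gap_years": 2}

score, ranked = explain_linear_score(weights, applicant)
print(f"score = {score:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

A breakdown like this also helps reviewers spot features, such as employment gaps, that may act as proxies for protected characteristics.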

Furthermore, policymakers must enact regulations and guidelines to govern the use of AI systems and prevent discrimination. This includes requiring companies to conduct bias audits and assessments of their AI algorithms, as well as providing recourse for individuals who have been harmed by biased decisions.

In conclusion, AI bias is a pressing issue that requires attention and action from all stakeholders. By understanding the causes and consequences of bias in AI systems, we can work together to build more equitable and inclusive technologies for the future.