Ensemble Learning

Ensemble Learning (EL) is a machine learning technique that combines multiple models to make more accurate predictions. The basic idea behind EL is that when the predictions of multiple models are combined, the errors of individual models can partly cancel out, which often gives better overall performance than any single model achieves.

EL can be applied to a wide range of machine learning algorithms, including decision trees, neural networks, support vector machines, and more.

There are several different approaches to Ensemble Learning, including:

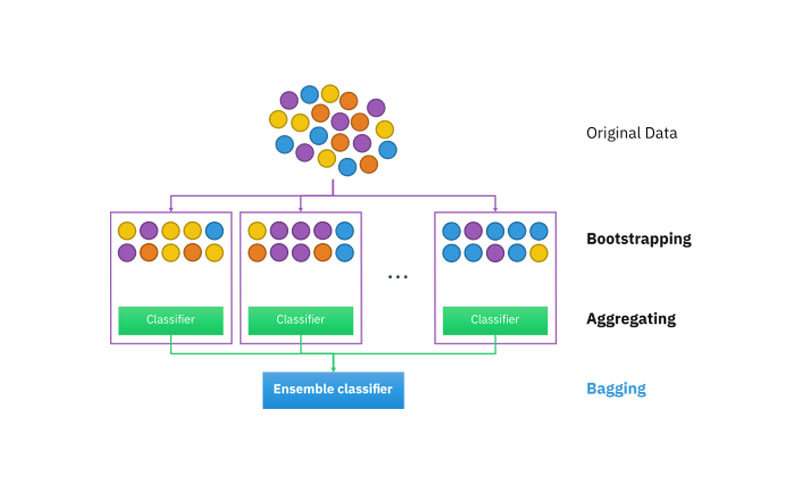

1. Bagging (Bootstrap Aggregating): In Bagging, multiple models are trained on different bootstrap samples of the training data (random samples drawn with replacement), and their predictions are then combined by voting for classification or by averaging for regression. This reduces variance, which helps to curb overfitting and improve the stability of the model.

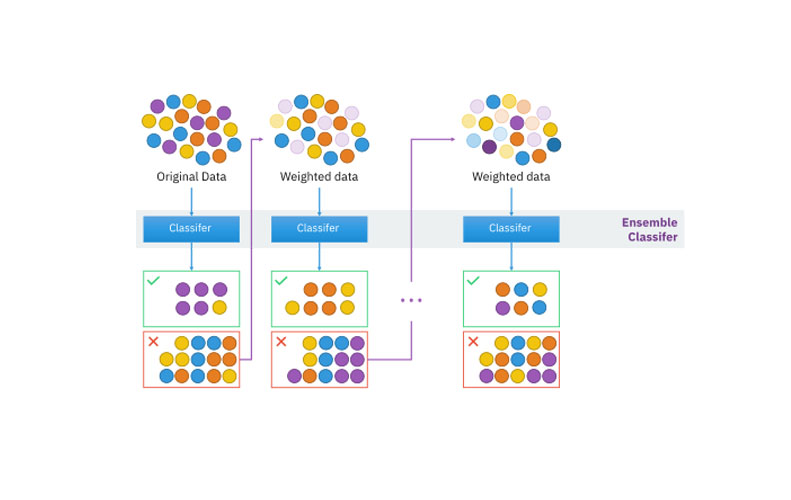

2. Boosting: In Boosting, multiple weak learners are combined to create a strong learner. Each weak learner is trained sequentially, with each new model focusing on the errors of the previous ones. This iterative process helps to improve the overall performance of the model.

3. Stacking: In Stacking, multiple models are trained on the same data, and their predictions are used as input features for a meta-model. The meta-model then combines the predictions of the base models to make the final prediction.
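As a rough illustration of the Bagging approach from the list above, the following sketch trains several one-feature threshold classifiers (decision stumps) on bootstrap samples and combines them by majority vote. The function names (`fit_stump`, `bagging_fit`, `bagging_predict`), the toy data, and the choice of stumps as base models are assumptions made for this example, not part of any particular library.

```python
import random
from collections import Counter


def fit_stump(points):
    """Fit a decision stump on (x, label) pairs with labels in {0, 1}.

    Returns (threshold, above), where `above` is the label predicted
    for x > threshold; the opposite label is predicted otherwise.
    """
    best = None
    for threshold, _ in points:
        for above in (0, 1):
            errors = sum(
                (above if x > threshold else 1 - above) != y for x, y in points
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, above)
    return best[1], best[2]


def predict_stump(stump, x):
    threshold, above = stump
    return above if x > threshold else 1 - above


def bagging_fit(points, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: same size as the data, drawn with replacement.
        sample = [rng.choice(points) for _ in points]
        models.append(fit_stump(sample))
    return models


def bagging_predict(models, x):
    """Combine the stumps' predictions by majority vote."""
    votes = Counter(predict_stump(m, x) for m in models)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: small x values are class 0, large ones class 1.
train = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
         (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]
ensemble = bagging_fit(train)
print(bagging_predict(ensemble, 0.2), bagging_predict(ensemble, 5.0))
```

Each stump sees a slightly different sample, so the learned thresholds differ; the vote smooths out those differences. An odd `n_models` avoids ties in this two-class setting.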

How does Ensemble Learning work?

The key idea behind Ensemble Learning is that by combining multiple models, the strengths of individual models can be leveraged while mitigating their weaknesses.

This is often referred to as the “wisdom of the crowd” effect, where the collective intelligence of multiple models is greater than that of any single model.
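A small calculation can make this effect concrete. The sketch below assumes, purely for illustration, that every model is correct with the same probability `p` and that the models err independently of one another, which real ensembles rarely satisfy exactly. Under those assumptions, the chance that a majority vote is correct follows from the binomial distribution; `majority_vote_accuracy` is a hypothetical helper name.

```python
from math import comb


def majority_vote_accuracy(n_models, p):
    """Probability that a majority of n_models independent classifiers,
    each correct with probability p, gives the right answer (n_models odd)."""
    needed = n_models // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(needed, n_models + 1)
    )


for n in (1, 3, 11):
    print(n, round(majority_vote_accuracy(n, 0.7), 3))
```

With three such models at 70% accuracy each, the majority is correct about 78.4% of the time, and the figure keeps rising as more independent models are added. When models make correlated errors the gain is smaller, which is why diversity among the models matters.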

Ensemble Learning works by training multiple models, either on the same data or on different samples of it, and then combining their predictions into a single output.

This can be done through methods such as averaging, voting, or using a meta-model to combine the predictions of base models.
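Two of these combination rules, voting and averaging, can be sketched in a few lines. The example below is a minimal illustration that assumes each model outputs a list of class probabilities; the names `hard_vote` and `soft_vote` and the numbers in `model_probs` are made up for this example.

```python
from collections import Counter


def hard_vote(labels):
    """Voting: return the label predicted by the most models."""
    return Counter(labels).most_common(1)[0][0]


def soft_vote(probabilities):
    """Averaging: average per-class probabilities across models and
    return the index of the class with the highest mean probability."""
    n_models = len(probabilities)
    n_classes = len(probabilities[0])
    mean = [sum(p[c] for p in probabilities) / n_models for c in range(n_classes)]
    return max(range(n_classes), key=mean.__getitem__)


# Hypothetical outputs of three models for one input: [P(class 0), P(class 1)].
model_probs = [[0.9, 0.1], [0.4, 0.6], [0.45, 0.55]]

# Each model's own predicted label is its most probable class.
labels = [max(range(len(p)), key=p.__getitem__) for p in model_probs]

print(hard_vote(labels))       # two of three models prefer class 1
print(soft_vote(model_probs))  # the averaged probabilities favor class 0
```

The two rules can disagree, as they do here: one very confident model outweighs two weakly confident ones under averaging but not under voting. Which rule works better depends on how well calibrated the models' probabilities are.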

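One of the main advantages of Ensemble Learning is that it can reduce overfitting and improve the generalization ability of the model. Averaging many models lowers variance, as in Bagging, while sequentially correcting earlier errors lowers bias, as in Boosting.

The benefit depends on diversity: when the models make different errors, the ensemble can capture different aspects of the data and make more robust predictions.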

Applications of EL

EL has been successfully applied to a wide range of fields and problems, including:

1. Image and speech recognition: Ensembles are used to improve the accuracy of image and speech recognition systems by reducing the errors that any single model makes.

2. Fraud detection: Ensembles are used in fraud detection systems to identify suspicious activities and transactions, helping to distinguish genuine from fraudulent behavior more effectively.

3. Financial forecasting: Ensembles are used to predict stock prices, market trends, and other financial indicators, where combining models can give more stable predictions to inform investment decisions.

4. Healthcare: Ensembles are used for disease diagnosis, patient monitoring, and treatment planning, where they can help to improve the accuracy of medical predictions.

With its wide range of applications and proven effectiveness, Ensemble Learning continues to be a popular and valuable tool in the field of machine learning.

Image credits:

Bagging: Wikimedia Commons

Boosting: Wikimedia Commons