What is Quantization in Machine Learning?

In machine learning, quantization is a method for making large models smaller without losing much accuracy. It helps models such as LLMs and text-to-image or text-to-video generators run faster and more efficiently on phones and other devices with limited power. Smaller models need less storage and less energy to run. Examples of models that are commonly quantized include Stable Diffusion, ControlNet, and Llama 2.

What is Quantization in Machine Learning?

In machine learning, quantizing a model is the process of reducing the precision of its numerical representations. It involves converting high-precision values (typically 32-bit floating-point numbers) into lower-precision formats (e.g., 8-bit integers).
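The conversion described above can be sketched in a few lines of plain Python. This is a minimal illustration of affine (scale and zero-point) quantization to 8-bit integers, not the implementation used by any particular framework; the function names and the sample `weights` list are assumptions made for the example.

```python
def quantize_params(values, num_bits=8):
    """Derive a scale and zero point that cover the observed value range."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    # The range is widened to include 0.0 so that zero maps to an exact integer.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0
    zero_point = round(qmin - lo / scale)
    return scale, zero_point, qmin, qmax


def quantize(values, scale, zero_point, qmin, qmax):
    """Map floats to integers: q = round(v / scale) + zero_point, then clamp."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Map integers back to approximate floats: v = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in q_values]


# Hypothetical weights, chosen only for illustration.
weights = [-1.0, -0.5, 0.0, 0.25, 1.5]
scale, zero_point, qmin, qmax = quantize_params(weights)
q_weights = quantize(weights, scale, zero_point, qmin, qmax)
restored = dequantize(q_weights, scale, zero_point)

print(q_weights)
print(restored)
```

Each restored value differs from the original by at most half of `scale`, which is the rounding error introduced by the integer grid.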

This technology is applicable across various fields, including signal processing, image compression, and speech recognition.

The primary objective of this process is to decrease model size and computational requirements without significantly compromising accuracy.

The Importance

This technology offers several advantages:

- Reduced model size: Smaller models require less storage space.

- Faster inference: Lower precision computations are generally quicker.

- Lower power consumption: Reduced computational intensity leads to decreased energy use.
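The size reduction is simple arithmetic: storage is roughly the number of parameters multiplied by the bits used per parameter. The sketch below assumes a hypothetical model with 7 billion parameters and ignores the small overhead of storing scales and zero points.

```python
def model_size_gb(num_params, bits_per_param):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 7_000_000_000  # hypothetical 7B-parameter model

sizes = {bits: model_size_gb(NUM_PARAMS, bits) for bits in (32, 16, 8, 4)}
for bits, size in sizes.items():
    print(f"{bits:>2}-bit weights: {size:.1f} GB")
```

Under these assumptions, moving from 32-bit to 8-bit weights cuts storage from 28 GB to 7 GB.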

However, quantizing a model also presents challenges:

- Accuracy loss: Lower precision can impact model performance.

- Quantization error: Discretization of values can introduce errors.
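Quantization error can be measured directly by quantizing a set of values and comparing them with the originals. The following sketch uses a synthetic sine signal and a simple symmetric quantizer, both chosen as assumptions for illustration, to show how the error grows as the bit width shrinks.

```python
import math


def fake_quantize(values, num_bits):
    """Round values onto a symmetric integer grid, then map back to floats."""
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [scale * max(-qmax, min(qmax, round(v / scale))) for v in values]


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Synthetic data standing in for real weights or activations.
signal = [math.sin(0.1 * i) for i in range(1000)]

errors = {
    bits: mean_squared_error(signal, fake_quantize(signal, bits))
    for bits in (8, 4, 2)
}
for bits, err in errors.items():
    print(f"{bits}-bit mean squared error: {err:.6f}")
```

Whether a given error level is acceptable depends on the model, so in practice the error is usually judged by its effect on task accuracy rather than on the raw values.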

Different Types

The process of quantizing a model encompasses several methods, each suited to different applications and model architectures:

Vector Quantization

This type involves representing a set of data points (vectors) with a smaller set of codebook vectors. Key aspects include:

- Codebook generation: Creating a representative set of codebook vectors.

- Encoding: Assigning data points to the nearest codebook vector.

- Decoding: Reconstructing data from codebook vectors.

Applications span image compression, speech recognition, and data clustering.
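The three steps above can be sketched with a small k-means style procedure. This is an illustrative version that assumes Euclidean distance and a simple deterministic initialization; real systems typically use tuned libraries and better initialization.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(codebook, vector):
    """Index of the codebook vector closest to `vector`."""
    return min(range(len(codebook)), key=lambda i: squared_distance(codebook[i], vector))


def build_codebook(vectors, k, iterations=10):
    """Codebook generation: k-means with evenly spaced initial centroids."""
    step = len(vectors) // k
    codebook = [list(vectors[i * step]) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in vectors:
            clusters[nearest(codebook, v)].append(v)
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                codebook[i] = [sum(dim) / len(members) for dim in zip(*members)]
    return codebook


def encode(codebook, vectors):
    """Encoding: replace each vector with the index of its nearest codeword."""
    return [nearest(codebook, v) for v in vectors]


def decode(codebook, codes):
    """Decoding: look each index up in the codebook."""
    return [codebook[c] for c in codes]


# Hypothetical 2-D data forming two obvious groups.
data = [(0.0, 0.1), (0.1, 0.0), (0.2, 0.1), (5.0, 5.1), (5.1, 4.9), (4.9, 5.0)]
codebook = build_codebook(data, k=2)
codes = encode(codebook, data)
reconstructed = decode(codebook, codes)

print(codes)
print(reconstructed)
```

Six vectors are stored as six small integers plus a two-entry codebook, at the cost of replacing each point with its cluster center.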

Product Quantization

This type extends vector quantization by decomposing high-dimensional vectors into lower-dimensional subspaces. This approach reduces computational complexity and memory footprint, making it suitable for large-scale nearest neighbor search problems.
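As a rough sketch of the idea, the code below splits each 4-dimensional vector into two 2-dimensional sub-vectors and learns a separate small codebook for each subspace. The helper names, the data, and the k-means initialization are assumptions made for illustration.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(codebook, vector):
    return min(range(len(codebook)), key=lambda i: squared_distance(codebook[i], vector))


def kmeans(points, k, iterations=10):
    """Tiny k-means with evenly spaced initial centroids."""
    step = len(points) // k
    centroids = [list(points[i * step]) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(centroids, p)].append(p)
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids


def split(vector, num_subspaces):
    """Cut a vector into equally sized sub-vectors."""
    size = len(vector) // num_subspaces
    return [tuple(vector[i * size:(i + 1) * size]) for i in range(num_subspaces)]


def train_pq(vectors, num_subspaces, k):
    """Learn one codebook per subspace."""
    parts = [split(v, num_subspaces) for v in vectors]
    return [kmeans([p[s] for p in parts], k) for s in range(num_subspaces)]


def pq_encode(codebooks, vector):
    subs = split(vector, len(codebooks))
    return [nearest(cb, sub) for cb, sub in zip(codebooks, subs)]


def pq_decode(codebooks, codes):
    out = []
    for cb, code in zip(codebooks, codes):
        out.extend(cb[code])
    return out


# Hypothetical 4-D vectors; each half is either near 0 or near 10.
vectors = [
    (0.0, 0.0, 10.0, 10.0),
    (0.2, 0.0, 0.0, 0.2),
    (10.0, 10.0, 0.0, 0.0),
    (10.0, 10.2, 10.0, 10.2),
]
codebooks = train_pq(vectors, num_subspaces=2, k=2)
all_codes = [pq_encode(codebooks, v) for v in vectors]
approx_first = pq_decode(codebooks, all_codes[0])

print(all_codes)
print(approx_first)
```

With two codebooks of two entries each, four distinct code combinations are available, which is how product quantization represents many more points than it stores centroids.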

LLM Quantization

Large Language Models (LLMs) benefit from this technology due to their immense size. Quantizing LLMs reduces model storage, accelerates inference, and decreases computational costs. A specific instance is vLLM, an LLM inference and serving engine that can run quantized models to reduce memory use during deployment.
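LLM weights are often quantized to 4 bits in small groups, with each group keeping its own scale so that a few large values do not degrade the rest. The sketch below illustrates that general idea with two 4-bit codes packed into each byte. The group size of 4, the function names, and the sample weights are assumptions for the example, and the code does not reproduce the storage format of any specific library.

```python
def quantize_group(group, num_bits=4):
    """Symmetric quantization of one group to signed codes in [-7, 7]."""
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(w) for w in group)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in group]
    return scale, codes


def pack_int4(codes):
    """Pack pairs of signed 4-bit codes into bytes, low nibble first."""
    if len(codes) % 2:
        codes = codes + [0]
    pairs = zip(codes[0::2], codes[1::2])
    return bytes(((hi & 0xF) << 4) | (lo & 0xF) for lo, hi in pairs)


def unpack_int4(packed, count):
    """Recover signed 4-bit codes from packed bytes."""
    codes = []
    for byte in packed:
        for nibble in (byte & 0xF, byte >> 4):
            codes.append(nibble - 16 if nibble >= 8 else nibble)
    return codes[:count]


def quantize_weights(weights, group_size=4):
    scales, codes = [], []
    for start in range(0, len(weights), group_size):
        scale, group_codes = quantize_group(weights[start:start + group_size])
        scales.append(scale)
        codes.extend(group_codes)
    return scales, pack_int4(codes)


def dequantize_weights(scales, packed, count, group_size=4):
    codes = unpack_int4(packed, count)
    return [scales[i // group_size] * c for i, c in enumerate(codes)]


# Hypothetical weights: the second group contains much larger values.
weights = [0.02, -0.01, 0.03, 0.00, 1.5, -0.7, 0.9, -1.4]
scales, packed = quantize_weights(weights)
restored = dequantize_weights(scales, packed, len(weights))

print(len(packed), "bytes for", len(weights), "weights")
print(restored)
```

Because the first group has its own small scale, its values survive quantization even though the second group contains values roughly fifty times larger.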

The Techniques

The process of quantizing a model encompasses various methods to reduce numerical precision. Primary techniques include:

Post-Training Quantization

This method applies quantization to a pre-trained model without retraining.

- Static: Determines a fixed scale and zero point for each tensor.

- Dynamic: Calculates scale and zero point at runtime.
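The difference between the two variants can be sketched as follows. Static quantization fixes the scale and zero point from calibration data ahead of time, while dynamic quantization recomputes them for each input. The calibration batches and runtime input are hypothetical values chosen to show what happens when an input falls outside the calibrated range.

```python
def affine_params(lo, hi, qmin=-128, qmax=127):
    """Scale and zero point for the range [lo, hi], widened to include 0."""
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0
    zero_point = round(qmin - lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    return [scale * (q - zero_point) for q in q_values]


# Static: parameters are computed once from calibration data.
calibration_batches = [[0.0, 0.5, 1.0], [0.2, 0.8, 2.0]]
static_scale, static_zp = affine_params(
    min(min(batch) for batch in calibration_batches),
    max(max(batch) for batch in calibration_batches),
)

# This input contains 4.0, which exceeds the calibrated maximum of 2.0.
runtime_input = [0.0, 1.0, 4.0]

static_out = dequantize(
    quantize(runtime_input, static_scale, static_zp), static_scale, static_zp
)

# Dynamic: parameters are recomputed from the tensor seen at runtime.
dyn_scale, dyn_zp = affine_params(min(runtime_input), max(runtime_input))
dynamic_out = dequantize(
    quantize(runtime_input, dyn_scale, dyn_zp), dyn_scale, dyn_zp
)

print("static :", static_out)
print("dynamic:", dynamic_out)
```

The static path clips 4.0 down to the calibrated maximum, while the dynamic path adapts its range at the cost of extra work per input and a coarser grid for the smaller values.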

Weight Quantization

Focuses on reducing the precision of model weights. This technique is often combined with activation quantization for optimal results.
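One common design choice when quantizing weights is the granularity of the scale: a single scale for the whole tensor, or one scale per output channel (row). The sketch below compares the two on a hypothetical weight matrix whose rows differ greatly in magnitude; all names and values are assumptions for illustration.

```python
QMAX = 127  # symmetric signed 8-bit range [-127, 127]


def per_tensor_scales(rows):
    """One scale shared by every row."""
    max_abs = max(abs(w) for row in rows for w in row)
    return [(max_abs / QMAX) or 1.0] * len(rows)


def per_channel_scales(rows):
    """One scale per row (output channel)."""
    return [(max(abs(w) for w in row) / QMAX) or 1.0 for row in rows]


def quantize_rows(rows, scales):
    return [
        [max(-QMAX, min(QMAX, round(w / s))) for w in row]
        for row, s in zip(rows, scales)
    ]


def max_error_per_row(rows, q_rows, scales):
    return [
        max(abs(w - q * s) for w, q in zip(row, q_row))
        for row, q_row, s in zip(rows, q_rows, scales)
    ]


# Hypothetical weights: row 0 is about 100x smaller than row 1.
weights = [
    [0.01, -0.02, 0.015],
    [1.0, -2.0, 1.5],
]

tensor_scales = per_tensor_scales(weights)
channel_scales = per_channel_scales(weights)

tensor_err = max_error_per_row(weights, quantize_rows(weights, tensor_scales), tensor_scales)
channel_err = max_error_per_row(weights, quantize_rows(weights, channel_scales), channel_scales)

print("per-tensor  max error by row:", tensor_err)
print("per-channel max error by row:", channel_err)
```

With a shared scale, the small row is rounded onto a grid designed for the large row, so per-channel scales reduce its error considerably.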

Activation Quantization

Reduces the precision of intermediate activations during model inference. This method can significantly impact model performance if not carefully implemented.
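When both activations and weights are quantized, the core arithmetic can run on integers and be rescaled once at the end. The sketch below shows this for a single dot product using symmetric 8-bit quantization; the input values are hypothetical, and real kernels add details such as zero points and fixed-point rescaling.

```python
def symmetric_quantize(values, num_bits=8):
    """Return integer codes and the scale that maps them back to floats."""
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def quantized_dot(activations, weights):
    """Dot product with integer multiply-accumulate and one final rescale."""
    q_act, act_scale = symmetric_quantize(activations)
    q_wgt, wgt_scale = symmetric_quantize(weights)
    accumulator = sum(a * w for a, w in zip(q_act, q_wgt))  # stays an integer
    return accumulator * act_scale * wgt_scale, accumulator


# Hypothetical activation and weight vectors.
activations = [0.5, -1.2, 3.0, 0.1]
weights = [0.2, 0.4, -0.1, 0.9]

exact = sum(a * w for a, w in zip(activations, weights))
approx, accumulator = quantized_dot(activations, weights)

print("exact :", exact)
print("approx:", approx)
```

The small gap between the two results is the combined rounding error of the activations and the weights, which is why activation ranges need careful calibration.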

Mixed Precision Quantization

Combines different precision levels for weights and activations, or for different layers of the same model. This approach offers flexibility in balancing accuracy and efficiency.
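One simple way to choose precisions, shown here purely as an illustrative heuristic, is to give each layer the lowest bit width whose quantization error stays under a tolerance. The layer names, weights, candidate bit widths, and the 5% tolerance are all assumptions for the example.

```python
def fake_quantize(values, num_bits):
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [scale * max(-qmax, min(qmax, round(v / scale))) for v in values]


def relative_error(values, num_bits):
    """Root of squared error divided by root of squared magnitude."""
    quantized = fake_quantize(values, num_bits)
    num = sum((v - q) ** 2 for v, q in zip(values, quantized))
    den = sum(v ** 2 for v in values) or 1.0
    return (num / den) ** 0.5


def choose_bitwidths(layers, candidates=(4, 8, 16), tolerance=0.05):
    """Pick the smallest candidate bit width that meets the tolerance."""
    plan = {}
    for name, weights in layers.items():
        plan[name] = next(
            (bits for bits in candidates if relative_error(weights, bits) <= tolerance),
            candidates[-1],
        )
    return plan


# Hypothetical layers: "attention" has an outlier that hurts 4-bit precision.
layers = {
    "ffn": [7.0, -3.0, 1.0, 0.0, -7.0, 5.0],
    "attention": [0.3, -0.2, 0.25, -0.1, 4.0],
}
plan = choose_bitwidths(layers)
print(plan)
```

In practice the sensitivity of a layer is usually judged by its effect on model accuracy rather than on raw weight error, so this weight-only criterion is only a starting point.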

Some Frameworks and Tools

Several frameworks and tools facilitate the process of quantizing a model:

- TensorFlow Lite: Targets mobile and embedded devices and supports both post-training quantization and quantization-aware training.

- PyTorch: Provides APIs for both post-training quantization and quantization-aware training.

- ONNX Runtime: ONNX Runtime quantizes models converted to the ONNX format.

These tools vary in features, ease of use, and supported hardware platforms. Careful consideration is necessary to select the optimal framework for specific use cases.

Model Performance

Quantizing a model usually affects its accuracy to some degree. Factors influencing this impact include:

- Quantization bitwidth: Lower bitwidths generally lead to greater accuracy loss.

- Quantization technique: The chosen method affects the degree of accuracy degradation.

- Model architecture: Certain architectures are more susceptible to errors.

To mitigate accuracy loss, quantization-aware training can be employed. This technique involves training the model with simulated quantization during the training process.
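The mechanics can be sketched on a toy problem: the forward pass uses a quantized copy of the weight, while the gradient is applied to the full-precision weight as if rounding were the identity function (often called a straight-through estimator). The one-parameter model, grid step, learning rate, and data below are assumptions chosen to keep the example small.

```python
def fake_quantize(w, step=0.25):
    """Simulate quantization by snapping the weight to a grid of size `step`."""
    return round(w / step) * step


def train(xs, ys, steps=20, lr=0.05):
    """Fit y = w * x while the forward pass only ever sees the quantized weight."""
    w = 0.0  # full-precision "latent" weight kept during training
    for _ in range(steps):
        w_q = fake_quantize(w)
        # Gradient of the mean squared error with respect to the quantized weight.
        grad = sum(2 * (w_q * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        # Straight-through: apply that gradient directly to the latent weight.
        w -= lr * grad
    return w


# Hypothetical data generated by a weight of 0.75, which lies on the grid.
xs = [1.0, 2.0, 3.0]
ys = [0.75 * x for x in xs]

latent_w = train(xs, ys)
deployed_w = fake_quantize(latent_w)

print("latent weight  :", latent_w)
print("deployed weight:", deployed_w)
```

Because the loss is always computed with the quantized weight, training settles on a latent value whose quantized form fits the data, which is the behavior quantization-aware training aims for.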

Case Studies

Real-world applications demonstrate the efficacy of this technology:

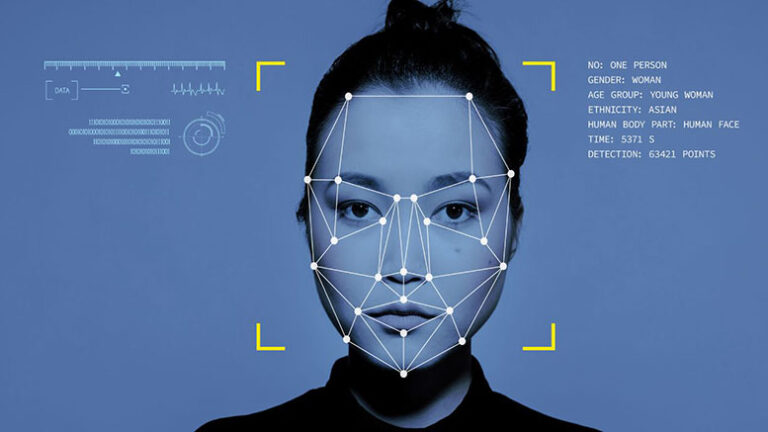

- Mobile and Edge Devices: Crucial for deploying models on resource-constrained platforms. Reduced model size and computational requirements enable efficient inference on smartphones, IoT devices, and other edge computing hardware.

- Deployment in Resource-Constrained Environments: Essential for scenarios with limited memory, processing power, and energy. It allows for the deployment of complex models in areas such as autonomous vehicles, medical devices, and robotics.

Let’s analyze a concrete example:

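Mixtral 8x7b: Quantized vs Full Precision

Mixtral 8x7b is a sparse mixture-of-experts language model from Mistral AI, the developer of Mistral 7b. Mixtral 8x7b quantized is the same model with its weights stored at lower precision.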

Key Differences

Model Size and Efficiency:

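- Mixtral 8x7b: The “8x” refers to its sparse mixture-of-experts design: each layer contains 8 expert feed-forward blocks, and a router selects 2 of them for each token. The model therefore has far more total parameters than a standard 7 billion parameter model (about 47 billion), while using only a fraction of them (about 13 billion) per token.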

- Mixtral 8x7b Quantized: This version takes the Mixtral 8x7b model and applies quantization to further reduce its size and increase inference speed. It’s essentially a compressed version of the original model.

Performance:

- Mixtral 8x7b: Generally offers better performance than Mistral 7b due to its larger mixture-of-experts architecture.

- Mixtral 8x7b Quantized: While faster and smaller, quantization often comes at the cost of some accuracy reduction compared to the full-precision model.

However, the trade-off between size, speed, and accuracy can be managed through careful optimization techniques.

Use Cases:

- Mixtral 8x7b: Suitable for various natural language processing tasks where performance and accuracy are prioritized.

- Mixtral 8x7b Quantized: Ideal for resource-constrained environments or real-time applications where model size and speed are critical, such as mobile devices or embedded systems.

Table 1: Comparison of full-precision and quantized Mixtral 8x7b

| Feature | Mixtral 8x7b (full precision) | Mixtral 8x7b Quantized |
|---|---|---|
| Model Size | Larger | Smaller |
| Inference Speed | Slower | Faster |
| Accuracy | High | Comparable |

While quantization can lead to performance gains, it is essential to evaluate the trade-off between model size, speed, and accuracy for specific applications.

Future Trends

Research on quantized models continues to advance. Likely directions include:

- Exploration of lower bitwidths: Investigating the potential of extremely low-precision quantization (e.g., 4-bit or 2-bit) while maintaining acceptable accuracy.

- Development of novel techniques: Exploring new approaches, such as non-uniform and adaptive quantization, to address the challenges of accuracy loss and quantization error.

- Integration with other optimization methods: Combining this technology with pruning, knowledge distillation, and other techniques to achieve further model compression and acceleration.

Optimizing AI models will significantly impact the AI landscape by enabling the deployment of larger and more complex models on a wider range of devices and platforms.

Frequently Asked Questions

What is the difference between quantization and compression?

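They are related but not the same. Quantization reduces the precision, and therefore the number of distinct values, used to represent data, which makes it one form of lossy compression. Compression is the broader goal of reducing data size, and it can also be achieved by other means, such as removing redundant information.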

Does it always make models less accurate?

Not always. While some accuracy loss is common, careful methods and tools can minimize this.

Can I use it for any type of machine learning model?

Yes, it can be applied to many types of models, but the best method depends on the model’s specific characteristics.

Is quantization new?

No, it has been used in various fields for a long time. Its application to machine learning is a more recent development.

Will it replace traditional machine learning?

No, it is a tool to improve existing models, not replace them.