Chat With RTX: Taking Chatbots to the Next Level with NVIDIA


Chatbots have become an integral part of our daily lives, assisting millions of people worldwide. These AI-powered tools have revolutionized the way we interact with technology, providing quick and contextually relevant information.

NVIDIA, a leader in artificial intelligence computing, has taken chatbot technology a step further with its latest release, Chat With RTX. The application brings personalized chatbots to NVIDIA RTX AI PCs and runs them on the local GPU rather than in the cloud.

Introducing Chat With RTX

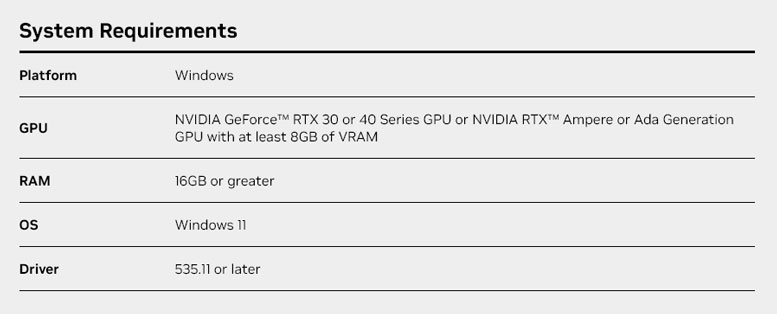

Chat With RTX is a tech demo developed by NVIDIA that lets users personalize a chatbot with their own content. What sets it apart is that it runs locally on Windows PCs powered by NVIDIA GeForce RTX 30 Series GPUs or newer. Because the work happens on the PC's own GPU rather than on a remote server, Chat With RTX delivers fast, customized generative AI responses right on your machine.

To get started with Chat With RTX, users can download the application for free from NVIDIA’s website. The minimum GPU requirement is a GeForce RTX 30 Series card with at least 8 GB of video memory (VRAM). The application also requires Windows 10 or 11 and the latest NVIDIA GPU drivers.
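If you are unsure whether your card clears the 8 GB VRAM bar, the `nvidia-smi` utility that ships with NVIDIA drivers can report each GPU’s name and total memory. The Python sketch below is a hypothetical helper, not part of Chat With RTX: it calls `nvidia-smi` with its CSV query options and compares the reported memory (in MiB) against 8 × 1024. It does not check the GPU generation, Windows version, or driver version, which still need to be verified separately, and the fixed threshold is a simplification.

```python
import shutil
import subprocess

# 8 GB minimum from the article, expressed in MiB (the unit nvidia-smi reports).
MIN_VRAM_MIB = 8 * 1024


def parse_gpus(csv_text: str) -> list[tuple[str, int]]:
    """Parse 'name, memory' lines from nvidia-smi CSV output (no header, no units)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma in case a GPU name ever contains one.
        name, mem = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def meets_minimum(mib: int) -> bool:
    """True if the reported total memory reaches the 8 GB minimum."""
    return mib >= MIN_VRAM_MIB


def query_gpus() -> list[tuple[str, int]]:
    """Ask nvidia-smi for each GPU's name and total memory; empty if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return parse_gpus(out)


if __name__ == "__main__":
    for name, mib in query_gpus():
        status = "meets" if meets_minimum(mib) else "is below"
        print(f"{name}: {mib} MiB ({status} the 8 GB VRAM minimum)")
```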

The Power of Retrieval-Augmented Generation (RAG)

Chat With RTX combines retrieval-augmented generation (RAG), NVIDIA TensorRT-LLM software, and NVIDIA RTX acceleration. RAG lets users connect the local files on their PC, as a dataset, to an open-source large language model such as Mistral or Llama 2: passages relevant to a question are retrieved from those files and supplied to the model along with the question, so the answer is grounded in the user’s own content.

Instead of searching through notes or saved content by hand, users type a question and receive a quick, contextually relevant answer. For example, if you can’t remember the restaurant your partner recommended during your trip to Las Vegas, ask Chat With RTX, and it will search your local files and answer with the relevant context.
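The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration under loud assumptions, not Chat With RTX’s actual pipeline: real RAG systems score passages with a neural embedding model and a vector index, whereas this sketch uses plain word-count vectors and cosine similarity as a stand-in. The helpers `retrieve` and `build_prompt` and the sample notes are hypothetical; the resulting prompt is what would be handed to a local model such as Mistral or Llama 2.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors (stand-in for embeddings)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (hypothetical helper)."""
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the prompt: retrieved passages first, then the user's question."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {query}\nAnswer:"
    )


notes = [
    "Las Vegas trip: Sam recommended the ramen restaurant near the Strip.",
    "Grocery list: eggs, milk, coffee beans.",
    "Meeting notes: quarterly budget review moved to Friday.",
]
question = "Which restaurant did Sam recommend in Las Vegas?"

if __name__ == "__main__":
    top = retrieve(question, notes, k=1)
    print(build_prompt(question, top))
```

Swapping the word counts for real embedding vectors changes only the scoring step; the shape of the pipeline (score every passage, keep the top k, prepend them to the prompt) stays the same.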

Seamlessly Integrating Various File Formats

Chat With RTX supports several file formats, including .txt, .pdf, .doc/.docx, and .xml. Users point the application at a folder containing these files, and within seconds the tool loads them into its library, ready to be queried.
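Loading a folder into a library generally involves two steps: finding the files in supported formats and splitting their text into passages small enough to retrieve individually. The sketch below shows both under stated assumptions; `collect_files`, `chunk_text`, and the 200-word window with a 50-word overlap are hypothetical choices, not the application’s documented behavior. Extracting text from .pdf and .doc/.docx files requires format-specific parsers, which are omitted here.

```python
import tempfile
from pathlib import Path

# Extensions named in the article; this helper is hypothetical, not NVIDIA's loader.
SUPPORTED = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def collect_files(folder: str) -> list[Path]:
    """Recursively list files under `folder` whose extension is supported (case-insensitive)."""
    return sorted(
        p for p in Path(folder).rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED
    )


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `size` words, each overlapping the previous by `overlap`."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # A new window starts only if the previous one did not already reach the end;
    # max(..., 1) guarantees one window for texts shorter than `overlap`.
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        Path(d, "notes.txt").write_text("Sam recommended the ramen place. " * 60)
        Path(d, "photo.jpg").write_bytes(b"")          # unsupported, skipped
        Path(d, "sub").mkdir()
        Path(d, "sub", "report.PDF").write_bytes(b"")  # matched despite upper-case suffix
        for path in collect_files(d):
            if path.suffix.lower() == ".txt":
                print(path.name, len(chunk_text(path.read_text())), "chunks")
            else:
                print(path.name, "(needs a format-specific parser)")
```

The overlap keeps a sentence that straddles a window boundary retrievable from at least one chunk, at the cost of storing some words twice.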

Chat With RTX also goes beyond local files: users can include information from YouTube videos and playlists. Adding a video URL to Chat With RTX integrates that video’s knowledge into the chatbot for even more contextual queries.
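Whatever Chat With RTX does internally, a tool that accepts both single videos and playlists first has to tell the two apart from the URL alone. The hypothetical `parse_youtube_url` helper below handles the common `youtube.com/watch?v=<id>`, `youtu.be/<id>`, and `youtube.com/playlist?list=<id>` shapes using only Python’s standard `urllib.parse`. It is an illustration, not the application’s code, and it does not fetch anything.

```python
from urllib.parse import parse_qs, urlparse


def parse_youtube_url(url: str) -> tuple[str, str]:
    """Classify a YouTube URL as ("video", id) or ("playlist", id).

    Hypothetical helper: covers the common URL shapes only.
    """
    parsed = urlparse(url)
    # Normalize www. and mobile (m.) hosts to the bare domain.
    host = parsed.netloc.lower().removeprefix("www.").removeprefix("m.")
    query = parse_qs(parsed.query)

    if host == "youtu.be" and parsed.path.strip("/"):
        return ("video", parsed.path.strip("/"))
    if host == "youtube.com":
        # A watch URL may also carry list=; this sketch treats it as the single video.
        if parsed.path == "/watch" and "v" in query:
            return ("video", query["v"][0])
        if parsed.path == "/playlist" and "list" in query:
            return ("playlist", query["list"][0])
    raise ValueError(f"Unrecognized YouTube URL: {url}")


if __name__ == "__main__":
    for link in (
        "https://www.youtube.com/watch?v=abc123&t=42s",
        "https://youtu.be/abc123",
        "https://www.youtube.com/playlist?list=PLxyz",
    ):
        print(parse_youtube_url(link))
```

Treating a watch URL that also carries `list=` as a single video is a design choice; a tool could just as reasonably ingest the whole playlist in that case.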

Whether you’re looking for travel recommendations based on your favorite influencer videos or quick tutorials and how-tos from top educational resources, Chat With RTX has got you covered.

Keeping Data Local and Secure

One of the key advantages of Chat With RTX is that it runs locally on Windows RTX PCs and workstations, keeping the user’s data private and secure. Unlike cloud-based large language model (LLM) services, Chat With RTX lets users process sensitive data on their local PC without sharing it with a third party or needing an internet connection.

This level of control and security is particularly important for users who handle confidential information or have strict data privacy requirements.

Developing LLM-Based Applications with RTX

Chat With RTX not only showcases the potential of accelerating large language models (LLMs) with RTX GPUs but also gives developers a starting point for building their own RAG-based applications. NVIDIA provides a reference project, TensorRT-LLM RAG, available on GitHub, which developers can use to develop and deploy their own LLM-based applications for RTX, accelerated by TensorRT-LLM.

To further encourage innovation in this space, NVIDIA has launched the Generative AI on NVIDIA RTX developer contest. Developers can enter with their generative AI-powered Windows apps or plug-ins for a chance to win prizes, including a GeForce RTX 4090 GPU and a full, in-person conference pass to NVIDIA GTC.

Final Thoughts

With the introduction of Chat With RTX, NVIDIA has once again pushed the boundaries of what’s possible with AI technology. This innovative application brings personalized chatbot experiences to NVIDIA RTX AI PCs, allowing users to harness the power of generative AI right from their desktops.

Whether you’re a developer looking to build your own LLM-based applications or an end user seeking quick, contextually relevant answers from your own files, Chat With RTX offers a clear glimpse of how local, GPU-accelerated chatbots could change the way we interact with AI.