AI Safety

Artificial intelligence (AI) has the potential to transform industries, improve efficiency, and enhance our quality of life. That power also brings responsibility: as AI becomes more capable and more deeply integrated into society, ensuring that it is used safely and ethically is critical.

This article explains why AI safety matters and outlines measures that can reduce the risk of harm.

The Risks of AI

Many AI systems can make decisions and take actions with little or no human intervention, which creates risks if they are not properly managed.

One of the main concerns is the potential for AI systems to make biased or discriminatory decisions. For example, if an AI algorithm used in hiring processes is trained on biased data, it could perpetuate existing inequalities and discrimination.
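To make the hiring example concrete, one common screening check compares selection rates across applicant groups. The sketch below applies the "four-fifths rule" from US employment-selection guidelines: a group whose selection rate is below 80% of the highest group's rate is flagged for closer review. The function names and the sample data are hypothetical, and a flag is a prompt for investigation rather than proof of discrimination.

```python
from collections import Counter


def selection_rates(outcomes):
    """Return the fraction of applicants selected in each group.

    `outcomes` is an iterable of (group, was_selected) pairs (hypothetical format).
    """
    applicants = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        applicants[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / applicants[group] for group in applicants}


def adverse_impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no applicant was selected; ratios are undefined")
    return {group: rate / highest for group, rate in rates.items()}


def flagged_groups(outcomes, threshold=0.8):
    """List groups whose ratio falls below the four-fifths (0.8) threshold."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical screening results: 10 applicants per group.
    data = ([("A", True)] * 5 + [("A", False)] * 5
            + [("B", True)] * 3 + [("B", False)] * 7)
    print(adverse_impact_ratios(data))  # {'A': 1.0, 'B': 0.6}
    print(flagged_groups(data))         # ['B']
```

Here group A is selected at 50% and group B at 30%, so group B's ratio is 0.6 and it falls below the 0.8 threshold. A check like this only detects unequal outcomes; explaining why they occur still requires examining the training data and the model.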

Another risk is that AI systems can make errors with serious consequences. For instance, an autonomous vehicle that misjudges a situation on the road could cause a collision that injures or kills people.

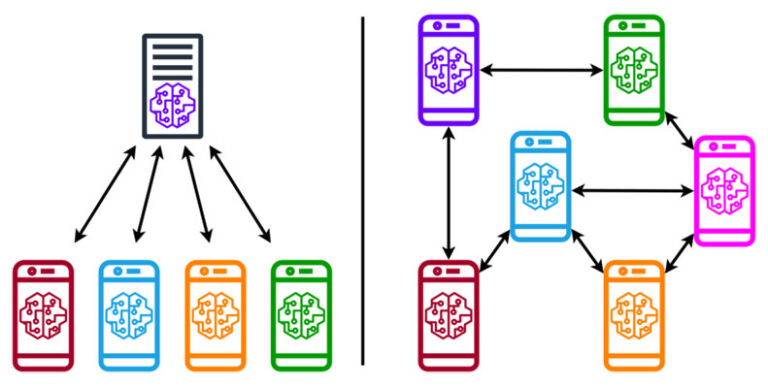

AI systems can also be hacked or manipulated by malicious actors, for example by tampering with the data a model is trained on or by feeding it inputs crafted to trigger wrong outputs.

AI Safety Measures

To address these risks and ensure that AI is used safely and ethically, several measures can be taken. One key step is to develop AI systems that are transparent and explainable.

This means designing AI algorithms so that humans can understand how they reach their decisions.

This transparency makes it easier to identify and correct biases, errors, and vulnerabilities in the system.

Another important measure is accountability for AI systems and for the people who build and deploy them.

Clear guidelines and regulations should establish which individuals and organizations are responsible for the actions and decisions of the AI systems they develop or operate.

Clear lines of responsibility help prevent misuse of AI technology and encourage its use for the benefit of society.

Furthermore, it is essential to prioritize the safety and well-being of individuals when designing and deploying AI systems.

This includes conducting thorough risk assessments, testing for potential harms, and implementing safeguards to mitigate risks.

For example, AI systems used in healthcare should be rigorously tested, including on patient data they were not trained on, to confirm that they support diagnosis and treatment accurately and reliably.
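For diagnostic tools, "accuracy" usually needs to be broken down further, because missing a disease and raising a false alarm carry different costs. The sketch below computes sensitivity (the share of true cases the model catches) and specificity (the share of healthy cases it correctly clears) on a held-out test set, then applies a pass/fail gate. The function names and the 0.95 and 0.90 thresholds are hypothetical placeholders; real acceptance criteria would come from clinical and regulatory requirements.

```python
def confusion_counts(y_true, y_pred):
    """Count outcomes for binary labels (1 = disease present, 0 = absent)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # sick, flagged
    fn = sum(1 for t, p in pairs if t and not p)      # sick, missed
    tn = sum(1 for t, p in pairs if not t and not p)  # healthy, cleared
    fp = sum(1 for t, p in pairs if not t and p)      # healthy, false alarm
    return tp, fn, tn, fp


def sensitivity_specificity(y_true, y_pred):
    tp, fn, tn, fp = confusion_counts(y_true, y_pred)
    # NaN signals that the test set lacks positive (or negative) cases.
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


def passes_release_gate(y_true, y_pred, min_sensitivity=0.95, min_specificity=0.90):
    """Hypothetical gate: both metrics must meet their minimums.

    Comparisons with NaN are False, so an incomplete test set fails the gate.
    """
    sens, spec = sensitivity_specificity(y_true, y_pred)
    return sens >= min_sensitivity and spec >= min_specificity


if __name__ == "__main__":
    # Hypothetical held-out labels: 4 patients with the disease, 6 without.
    y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
    print(sensitivity_specificity(y_true, y_pred))
    print(passes_release_gate(y_true, y_pred))  # False: sensitivity is only 0.75
```

Reporting both numbers, rather than a single accuracy figure, prevents a model that rarely flags disease from looking good simply because most patients in the test set are healthy.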

Ethical Considerations in AI Safety

In addition to technical measures, ethical considerations play a crucial role in ensuring the safety of AI.

Ethical guidelines and frameworks can help guide the development and deployment of AI systems in a way that aligns with societal values and norms.

For instance, AI systems should be designed to respect human rights, privacy, and autonomy.

It is also important to consider AI's effect on employment and economic inequality. As AI technology advances, some jobs may be automated, leading to unemployment and economic hardship for the workers who held them.

Policies and programs should be put in place to help workers in industries affected by AI transition to new roles.

Moreover, AI alignment (ensuring that AI systems act in accordance with human values and goals) is a critical ethical concern.

Continued research in AI ethics and alignment is essential, because specifying human values and preferences precisely enough for an AI system to follow them reliably is still an open problem.

Conclusion

AI safety is a multifaceted and complex issue that requires a collaborative effort from researchers, policymakers, industry leaders, and society as a whole.

By addressing the risks and ethical questions that AI raises, we can harness this technology for the greater good and ensure that it benefits humanity.

Through transparency, accountability, and ethical guidelines, we can pave the way for a future where AI is used responsibly and ethically.