Generative Pre-trained Transformer (GPT)

Generative Pre-trained Transformer (GPT): Revolutionizing Natural Language Processing

In recent years, natural language processing (NLP) has advanced rapidly, driven in large part by machine learning models such as the Generative Pre-trained Transformer (GPT). GPT is a deep learning model trained on large amounts of text data to generate human-like text. This article explains what GPT is, how it works, where it is applied, and which challenges remain.

What is Generative Pre-trained Transformer (GPT)?

Generative Pre-trained Transformer, or GPT for short, is a type of transformer-based deep learning model developed by OpenAI. The transformer architecture, introduced by Vaswani et al. in the 2017 paper "Attention Is All You Need", has become the standard architecture for many NLP tasks because its attention mechanism handles long-range dependencies in text efficiently.

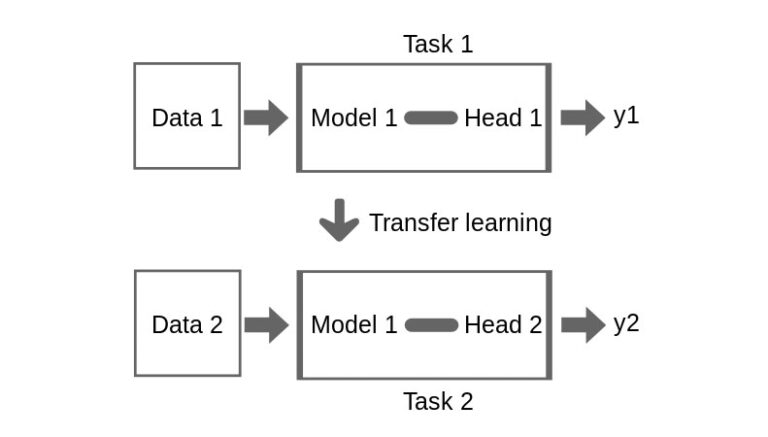

GPT builds on the transformer architecture by pre-training a large neural network on vast amounts of text data. During pre-training, the model learns to predict the next word in a sequence (more precisely, the next token, which may be a whole word or a piece of one), and in doing so it picks up language patterns and semantics. Once pre-training is complete, the model can be fine-tuned on specific tasks such as language translation, text generation, and sentiment analysis.
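The next-word objective is usually expressed as a cross-entropy loss: the model is penalized by the negative log of the probability it assigned to the word that actually came next. The following is a minimal sketch of that calculation in plain Python. The three-entry vocabulary, the scores, and the function names are made up for illustration and are not taken from any GPT implementation.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, target_ids):
    """Average negative log-likelihood of the true next tokens.

    logits_per_position[t] holds one score per vocabulary entry, produced
    after the model has seen the sequence up to position t; target_ids[t]
    is the index of the token that actually came next.
    """
    losses = []
    for logits, target in zip(logits_per_position, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Hypothetical 3-entry vocabulary with made-up scores for two positions.
logits = [[2.0, 0.5, 0.1], [0.1, 0.2, 3.0]]
targets = [0, 2]
loss = next_token_loss(logits, targets)

# A model that spreads probability evenly scores log(vocabulary size).
uniform = next_token_loss([[0.0, 0.0, 0.0]] * 2, targets)
print(f"loss = {loss:.3f}, uniform baseline = {uniform:.3f}")
```

Training adjusts the network's weights to push this loss down, which amounts to giving the correct next word a higher probability than a uniform guess would.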

How does GPT work?

At its core, GPT is a language model that generates text one word at a time, predicting each next word from the context provided by the previous words. The model stacks multiple transformer layers, each built around a self-attention mechanism in which every position attends only to the positions before it. This lets the model capture long-range dependencies in text and generate coherent, contextually relevant responses.
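To make the self-attention idea concrete, here is a minimal single-head sketch of scaled dot-product attention restricted to the current and earlier positions (a causal mask). It is an illustration under simplifying assumptions: the vectors are made up, and the same vectors are reused as queries, keys, and values, whereas a real model derives each from learned projections and uses many heads and layers.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Position t only attends to positions 0..t, so the output at t
    never depends on later words.
    """
    d = len(queries[0])
    outputs = []
    for t, q in enumerate(queries):
        # Score the query against the current and earlier positions only.
        scores = [dot(q, keys[s]) / math.sqrt(d) for s in range(t + 1)]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        out = [sum(w * values[s][i] for s, w in enumerate(weights))
               for i in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Made-up 2-dimensional vectors standing in for a 3-word sequence.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_self_attention(x, x, x)
for position, vector in enumerate(out):
    print(position, [round(v, 3) for v in vector])
```

Because of the mask, the first position can only attend to itself, and changing a later word leaves the outputs for earlier positions unchanged. That property is what allows the model to be trained on next-word prediction at every position of a sequence at once.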

During pre-training, GPT is fed a large corpus of text data, such as books, articles, and websites, and is trained to predict the next word in a sequence given the previous words. No manual labels are needed, because the text itself supplies the correct answer at every position. In the process, the model absorbs the statistical patterns of grammar, syntax, and semantics, which is what allows it to generate fluent, human-like text.
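The idea of learning statistical patterns from raw text and then generating by repeated next-word prediction can be shown with a deliberately tiny stand-in. The sketch below is not how GPT works internally: it replaces the neural network with simple counts of which word follows which (a bigram model), and the corpus and function names are invented for illustration. The train-then-generate loop, however, has the same overall shape.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, start, max_words=5):
    """Greedy generation: repeatedly append the most likely next word."""
    out = [start]
    for _ in range(max_words):
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# Made-up training text; real pre-training corpora are vastly larger.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(predict_next(model, "the"))
print(generate(model, "the", max_words=1))
```

A bigram model only looks one word back, which is exactly the limitation that self-attention over the full preceding context is meant to remove.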

Applications of GPT

GPT is applied across many industries, in areas such as customer service, content generation, and language translation. Some of the most common applications include:

1. Text generation: GPT can generate human-like text for chatbots, virtual assistants, and content creation platforms. Because its responses to user queries are coherent and contextually relevant, it is a useful tool for improving user engagement and customer satisfaction.

2. Language translation: GPT can be fine-tuned for translation by training it on parallel text data in multiple languages. The resulting model can translate between those languages, which helps break down language barriers and improve cross-cultural communication.

3. Sentiment analysis: GPT can be used to analyze and classify the sentiment of text data, such as social media posts, customer reviews, and news articles. The model labels text as positive, negative, or neutral, helping businesses understand customer feedback and sentiment trends.
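One common way to put a generative model to work on tasks like these is to phrase each task as text for the model to continue. The sketch below only builds such prompts; it does not call any model. The `build_prompt` function and its templates are hypothetical, chosen for illustration rather than taken from any documented format.

```python
def build_prompt(task, text, target_language="French"):
    """Frame a task as text that a language model could continue.

    The templates here are illustrative assumptions, not a documented format.
    """
    if task == "generation":
        # Plain continuation: the model simply carries on from the text.
        return text
    if task == "translation":
        return f"Translate English to {target_language}:\n{text} =>"
    if task == "sentiment":
        return f"Review: {text}\nSentiment (positive, negative, or neutral):"
    raise ValueError(f"unknown task: {task}")

print(build_prompt("translation", "Good morning"))
print(build_prompt("sentiment", "The battery died after two days."))
```

In each case the model's continuation of the prompt would be read as the answer: a translated sentence or a sentiment label. Fine-tuning on task-specific examples, as described above, is the alternative when prompting alone is not reliable enough.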

Challenges and Future Directions

While GPT has driven significant advances in NLP, some challenges remain. One of the main ones is the model’s tendency to generate biased or inappropriate text, which stems from biases present in its training data. Researchers are developing techniques to mitigate bias in language models so that generated text is fairer.

Later models in the same family, such as GPT-3 (2020) and GPT-4 (2023), have already proved more powerful and versatile than the original GPT, and we can expect further language models based on the transformer architecture. These models will continue to push the boundaries of what is possible in NLP and help us create more intelligent and human-like AI systems.

In conclusion, the Generative Pre-trained Transformer (GPT) is a deep learning model that has reshaped the field of natural language processing. Its ability to generate human-like text and model complex language patterns is paving the way for more intelligent and versatile AI systems. As researchers continue to improve and refine the model, we can expect further applications and advances in NLP.